Ruixuan Liu

I am a PhD student in the Robotics Institute, part of the School of Computer Science, at Carnegie Mellon University, advised by Prof. Changliu Liu (Intelligent Control Lab). My research focuses on robot learning, manipulation, and control, generative intelligence, and human-robot collaboration. I received my Bachelor’s degree in Electrical and Computer Engineering, with a minor in Robotics, from Carnegie Mellon University. During my undergraduate studies, I was fortunate to work in the AirLab led by Prof. Sebastian Scherer, where my work focused on sensor fusion and 3D reconstruction for structural inspection.

Email / Google Scholar / CV / LinkedIn

Highlights

News

Research

I'm interested in robotics, particularly manipulation, generative intelligence, and human-robot collaboration. Please see the list below for my research work.

Prompt-to-Product: Generative Assembly via Bimanual Manipulation
Ruixuan Liu*, Philip Huang*, Ava Pun, Kangle Deng, Shobhit Agarwal, Zhenran Tang, Michelle Liu, Deva Ramanan, Jun-Yan Zhu, Jiaoyang Li, Changliu Liu
*Equal Contributions
In part supported by CMU MFI
Paper / Project Website / Youtube
We present Prompt-to-Product, a proof-of-concept physical AI system that transforms abstract human imagination into tangible physical creations. Users simply describe their ideas in natural language, and Prompt-to-Product interprets those descriptions to generate concrete, physically buildable assembly designs. The system then reasons about and constructs the final product brick by brick, bringing imagination seamlessly into reality.

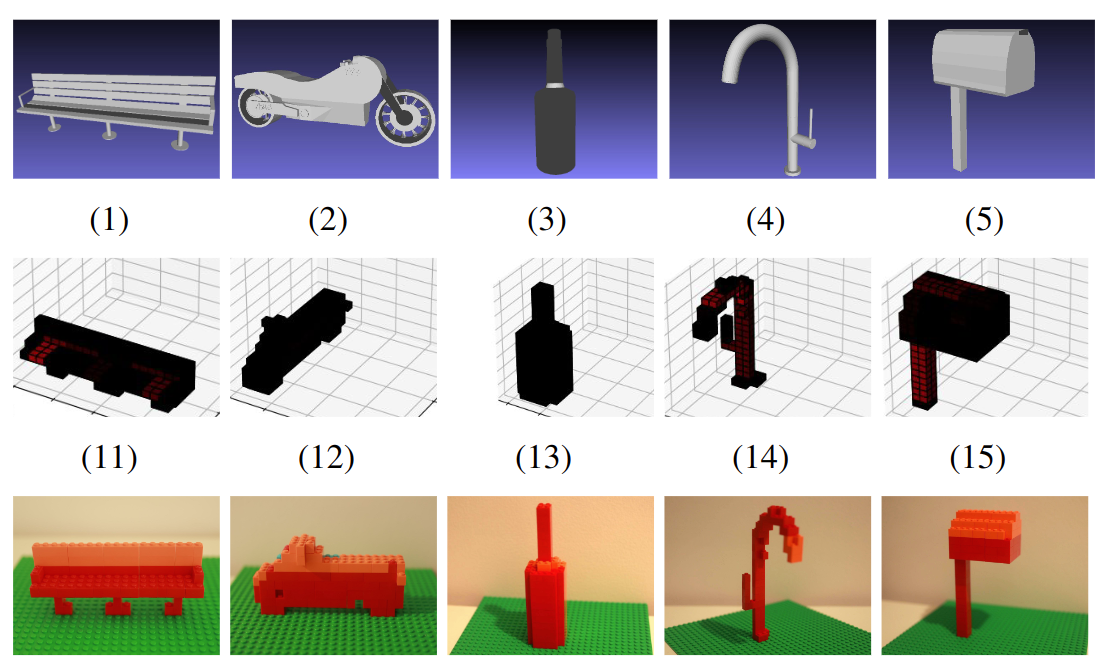

BrickGPT: Generating Physically Stable and Buildable Brick Structures from Text
Ava Pun*, Kangle Deng*, Ruixuan Liu*, Deva Ramanan, Changliu Liu, Jun-Yan Zhu
*Equal Contributions
International Conference on Computer Vision (ICCV), 2025
Best Paper Award (Marr Prize)
In part supported by CMU MFI
Paper / Project Website / X
BrickGPT generates physically stable and buildable assembly structures from user-provided text prompts in an end-to-end manner. While this work focuses on bricks, its broader significance lies in demonstrating a viable approach to incorporating physical understanding and manufacturing constraints into generative models.

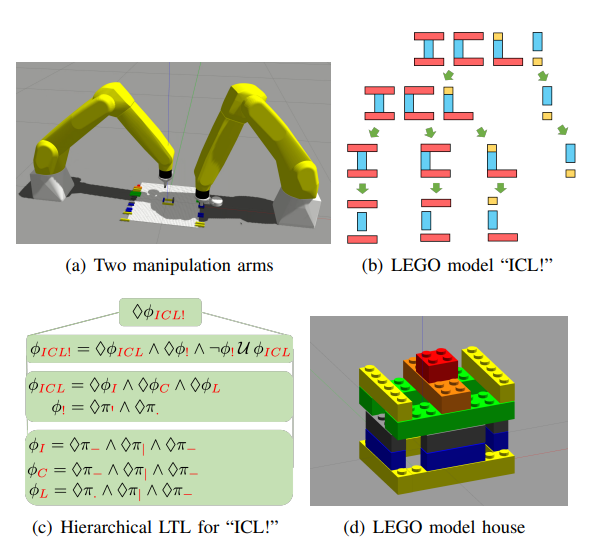

APEX-MR: Multi-Robot Asynchronous Planning and Execution for Cooperative Assembly
Philip Huang*, Ruixuan Liu*, Shobhit Aggarwal, Changliu Liu, Jiaoyang Li
*Equal Contributions
Robotics: Science and Systems (RSS), 2025
Best Poster Finalist at a 2025 ICRA workshop
In part supported by CMU MFI
Paper / Video / Project Website / X
This paper studies multi-robot coordination and proposes APEX-MR, an asynchronous planning and execution framework designed to safely and efficiently coordinate multiple robots to achieve dexterous cooperative tasks. APEX-MR significantly improves task efficiency, scales to large-scale, long-horizon tasks, is robust to uncertainty and contingencies, and ensures collaboration safety. We deploy APEX-MR on a bimanual system and present the first robotic system capable of performing customized LEGO assembly using commercial LEGO bricks.

Physics-Aware Combinatorial Assembly Sequence Planning using Data-free Action Masking
Ruixuan Liu, Alan Chen, Weiye Zhao, Changliu Liu
IEEE Robotics and Automation Letters (RA-L), 2025
In part supported by CMU MFI
Paper / Code
This paper formulates combinatorial assembly sequence planning as a reinforcement learning problem. In particular, it proposes an optimization-based, physics-aware action mask to address the challenges posed by the sim-to-real gap and the combinatorial nature of the problem. The proposed physics-aware action mask effectively guides assembly policy learning and ensures violation-free deployment by planning physically executable assembly sequences to construct goal objects.

Robustifying Long-term Human-Robot Collaboration through a Hierarchical and Multimodal Framework
Peiqi Yu*, Abulikemu Abuduweili*, Ruixuan Liu, Changliu Liu
Paper / Video / Code
This paper introduces a multimodal hierarchical framework for efficient long-term human-robot collaboration, integrating visual observations and speech commands for intuitive interactions. Deployed on the KINOVA GEN3 robot, the framework is validated through extensive real-world user studies.

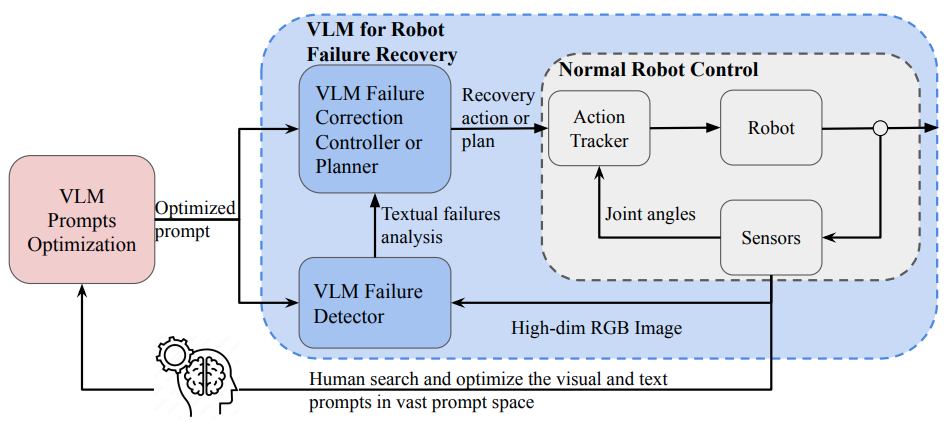

Automating Robot Failure Recovery Using Vision-Language Models With Optimized Prompts
Hongyi Chen, Yunchao Yao, Ruixuan Liu, Changliu Liu, Jeffrey Ichnowski
American Control Conference (ACC), 2025
In part supported by CMU MFI
Paper / Code
This paper investigates how optimizing visual and text prompts can enhance the spatial reasoning of vision-language models (VLMs), enabling them to function effectively as black-box controllers for both motion-level position correction and task-level recovery from unknown failures. Specifically, the optimizations include identifying key visual elements in visual prompts, highlighting these elements in text prompts for querying, and decomposing the reasoning process into failure detection and control generation.

StableLego: Stability Analysis of Block Stacking Assembly
Ruixuan Liu, Kangle Deng, Ziwei Wang, Changliu Liu
IEEE Robotics and Automation Letters (RA-L), 2024
In part supported by CMU MFI
Paper / Code
This paper proposes a new optimization formulation, which optimizes over force-balancing equations, to infer the structural stability of block stacking assemblies. In addition, we provide StableLego, a comprehensive Lego assembly dataset that includes a wide variety of Lego assembly designs for real-world objects. The dataset contains more than 50k Lego structures built from standardized Lego bricks of different dimensions, along with their stability inferences generated by the proposed algorithm.

Robotic Planning under Hierarchical Temporal Logic Specifications
Xusheng Luo, Shaojun Xu, Ruixuan Liu, Changliu Liu
IEEE Robotics and Automation Letters (RA-L), 2024
In part supported by NSF
Paper / Youtube
This paper proposes a decomposition-based method for tasks specified by hierarchical temporal logic. Each specification is first broken down into a set of temporally interrelated sub-tasks. We then mine the temporal relations among the sub-tasks of different specifications within the hierarchy. Finally, a mixed-integer linear program (MILP) is used to generate a spatio-temporal plan for each robot.

A Lightweight and Transferable Design for Robust LEGO Manipulation
Ruixuan Liu, Yifan Sun, Changliu Liu
International Symposium on Flexible Automation (ISFA), 2024
In part supported by Siemens, CMU MFI
Paper / Youtube
This paper investigates safe and efficient robotic LEGO manipulation. In particular, it reduces the complexity of manipulation through hardware-software co-design. An end-of-arm tool (EOAT) is designed that reduces the problem dimension and allows large industrial robots to easily manipulate LEGO bricks. In addition, the paper uses an evolution strategy to safely optimize the robot motion for LEGO manipulation.

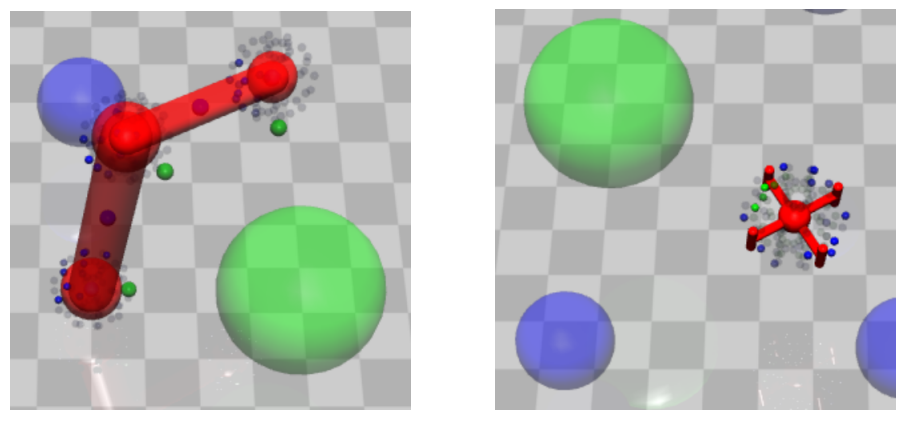

GUARD: A Safe Reinforcement Learning Benchmark
Weiye Zhao, Rui Chen, Yifan Sun, Ruixuan Liu, Tianhao Wei, Changliu Liu
Transactions on Machine Learning Research (TMLR), 2024
In part supported by NSF
Paper / Code
This paper introduces GUARD, a Generalized Unified SAfe Reinforcement Learning Development Benchmark. GUARD has several advantages compared to existing benchmarks. First, GUARD is a generalized benchmark with a wide variety of RL agents, tasks, and safety constraint specifications. Second, GUARD comprehensively covers state-of-the-art safe RL algorithms with self-contained implementations. Third, GUARD is highly customizable in tasks and algorithms.

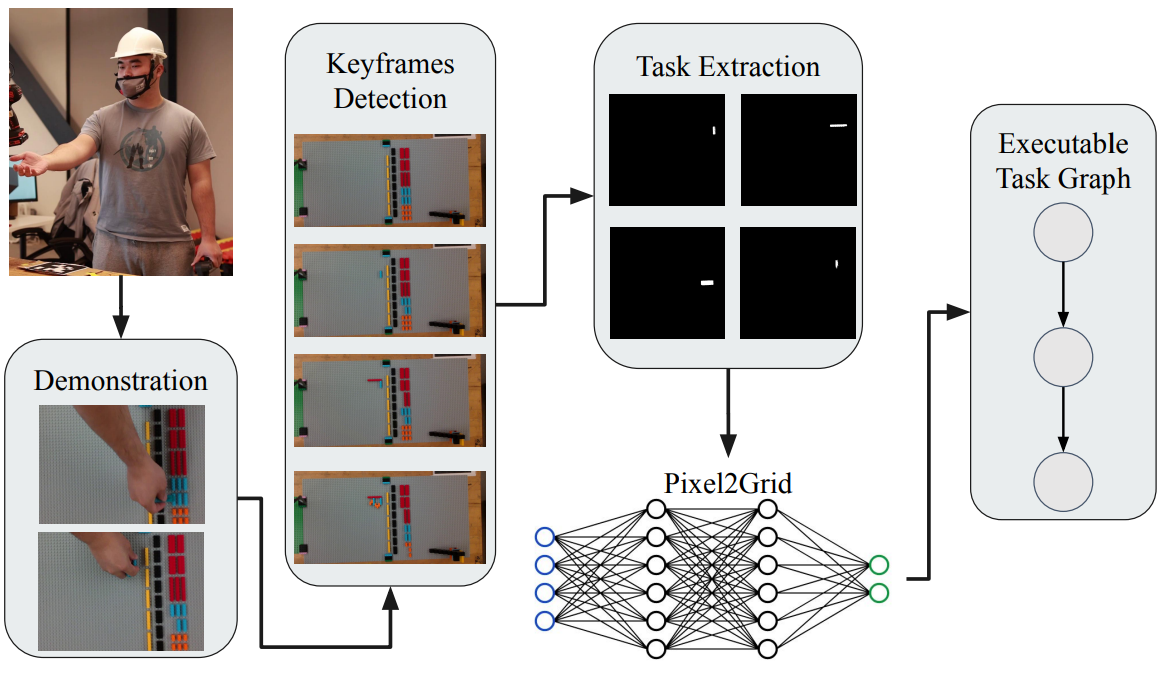

Simulation-aided Learning from Demonstration for Robotic LEGO Construction
Ruixuan Liu, Alan Chen, Xusheng Luo, Changliu Liu
In part supported by Siemens, CMU MFI
Paper / Youtube
This paper presents a simulation-aided learning from demonstration (SaLfD) framework for easily deploying LEGO prototyping capability to robots. In particular, the user demonstrates how to construct a customized, novel LEGO object. The robot extracts the task information by observing the human operation and generates a construction plan. A simulation is developed to verify the correctness of the learned construction plan and the resulting LEGO prototype.

Robotic LEGO Assembly and Disassembly from Human Demonstration
Ruixuan Liu, Yifan Sun, Changliu Liu
ACC Workshop on Recent Advancement of Human Autonomy Interaction and Integration, 2023
In part supported by Siemens, CMU MFI
Paper / Youtube
This paper studies automatic prototyping using LEGO. To satisfy individual needs and support self-sustainability, it presents a framework that learns assembly and disassembly sequences from human demonstrations.

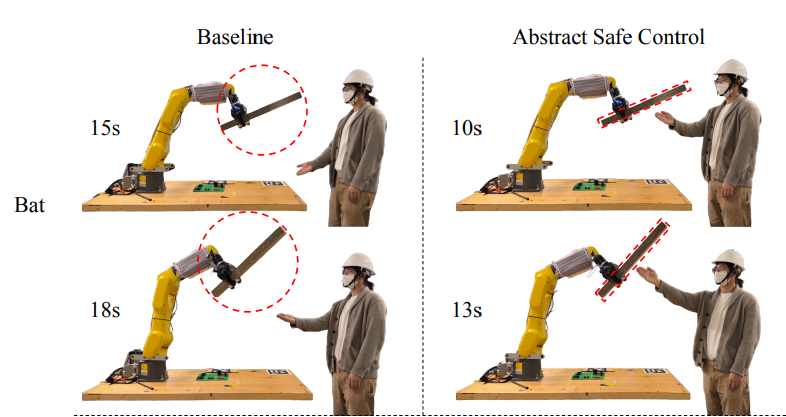

Proactive Human-Robot Co-Assembly: Leveraging Human Intention Prediction and Robust Safe Control
Ruixuan Liu, Rui Chen, Abulikemu Abuduweili, Changliu Liu
IEEE Conference on Control Technology and Applications (CCTA), 2023
In part supported by Ford
Paper / Project Website / news
This paper presents an integrated framework for proactive human-robot collaboration (HRC). A robust intention prediction module, which leverages prior task information and human-in-the-loop training, is learned to guide the robot toward efficient collaboration. The framework also uses robust safe control to ensure interactive safety under uncertainty. It is applied to a co-assembly task using a Kinova Gen3 robot.

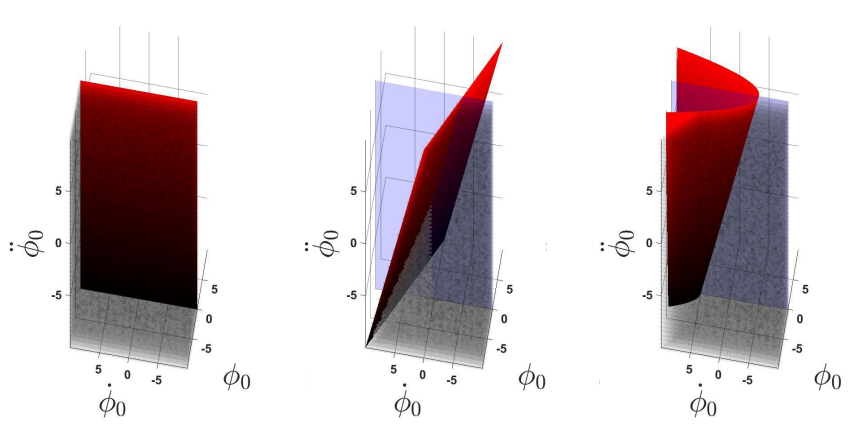

Zero-shot Transferable and Persistently Feasible Safe Control for High Dimensional Systems by Consistent Abstraction
Tianhao Wei, Shucheng Kang, Ruixuan Liu, Changliu Liu
IEEE Conference on Decision and Control (CDC), 2023
In part supported by NSF
Paper
This paper proposes a system abstraction method that enables the design of energy functions on a low-dimensional model. The energy function can then be synthesized with respect to the low-dimensional model to ensure persistent feasibility. The resulting safe controller can be transferred directly to other systems with the same abstraction, e.g., when a robot arm holds different tools.

Task-Agnostic Adaptation for Safe Human-Robot Handover
Ruixuan Liu, Rui Chen, Changliu Liu
IFAC Workshop on Cyber-Physical and Human Systems (CPHS), 2022
Best Student Paper
In part supported by Siemens
Paper / Video / Project Website
This paper proposes a task-agnostic adaptable controller that can (1) adapt to different lighting conditions, (2) adapt to individual behaviors and ensure safety when interacting with different humans, and (3) enable easy transfer across robot platforms with different control interfaces.

Safe Interactive Industrial Robots using Jerk-based Safe Set Algorithm
Ruixuan Liu, Rui Chen, Changliu Liu
International Symposium on Flexible Automation (ISFA), 2022
In part supported by Siemens, Ford
Paper / Project Website / Youtube
This paper introduces a jerk-based safe set algorithm (JSSA) to ensure collision avoidance while considering robot dynamics constraints. The JSSA greatly extends the scope of the original safe set algorithm, which had previously been applied only to second-order systems with unbounded accelerations. The JSSA is implemented on the FANUC LR Mate 200iD/7L robot and validated with human-robot interaction (HRI) tasks.

Jerk-bounded Position Controller with Real-Time Task Modification for Interactive Industrial Robots
Ruixuan Liu, Rui Chen, Yifan Sun, Yu Zhao, Changliu Liu
IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), 2022
In part supported by Siemens
Paper / Project Website / Youtube
This paper presents a jerk-bounded position control driver (JPC) for industrial robots. JPC provides a unified interface for tracking complex trajectories and enforces dynamic constraints using motion-level control, without accessing servo-level control. Most importantly, JPC enables real-time trajectory modification: users can overwrite the ongoing task with a new one without violating dynamic constraints.

Iterative Adversarial Data Augmentation
Ruixuan Liu, Changliu Liu
ICML Workshop on Human in the Loop Learning, 2021
In part supported by Ford
Paper
This paper proposes an iterative adversarial data augmentation (IADA) framework to learn neural network models from limited training data. The method uses formal verification to identify the most “confusing” input samples and leverages human guidance to safely and iteratively augment the training data with these samples.

Human Motion Prediction using Adaptable Recurrent Neural Networks and Inverse Kinematics
Ruixuan Liu, Changliu Liu
IEEE Control Systems Letters, 2021
In part supported by Ford
Paper / Project Website / Youtube
This project focuses on predicting human arm motion for assembly tasks. In particular, online adaptation techniques are used to reduce prediction error caused by model mismatch and data scarcity. In addition, a physical arm model is adopted to generate physically feasible motion predictions.

Automatic Onsite Polishing
Ruixuan Liu, Weiye Zhao, Suqin He, Changliu Liu
In collaboration with Siemens, Yaskawa Motoman
In part supported by ARM Institute
Project Website / Youtube
Conventional weld bead removal is performed manually by human workers, which is time-consuming, expensive, and, more importantly, dangerous, especially in confined environments such as the inside of a metal tube. This project develops a robotic solution for polishing and grinding complex surfaces in a confined workspace to reduce cost and manual effort.

Award

Service

Reviewer for

Teaching

Teaching assistant for 16-720: Computer Vision. Instructor: Prof. Srinivasa G. Narasimhan.
Teaching assistant for 16-811: Math Fundamentals for Robotics. Instructor: Prof. Michael Erdmann.
Teaching assistant for 18-202: Mathematical Foundations of Electrical Engineering. Instructor: Prof. José Moura.

Other Projects

These include coursework and side projects.

Lidar Obstacle Detection
Ruixuan Liu
Internship at Zenuity
youtube
This was a project from my internship at Zenuity in the summer of 2019. The team was building a mobile robot that could maneuver around the office autonomously. There were many modules to implement, including path planning, motion control, and safety. I worked on the Lidar perception module, whose goal was to use the Lidar to detect obstacles and identify the free space that the robot could traverse.

Basketball Retriever
Ruixuan Liu, Alvin Shi, Roy Li
ECE Capstone: 18-500
youtube
This was my capstone project during my undergraduate studies. The idea was to build a collaborative mobile robot that helps basketball players during practice. When a player practices shooting, a missed shot sends the ball bouncing to random places, and getting it back can be frustrating and time-consuming. In this project, we therefore set out to build a mobile robot that retrieves the ball each time it bounces away. We would like to thank Prof. Tamal Mukherjee for his guidance and support.

Real-time Object Tracking
Ruixuan Liu, Alvin Shi
Computer Vision: 16-720
youtube
This was a course project for 16-720: Computer Vision. The goal of this project was to build a pipeline that could robustly track a desired object in real time.

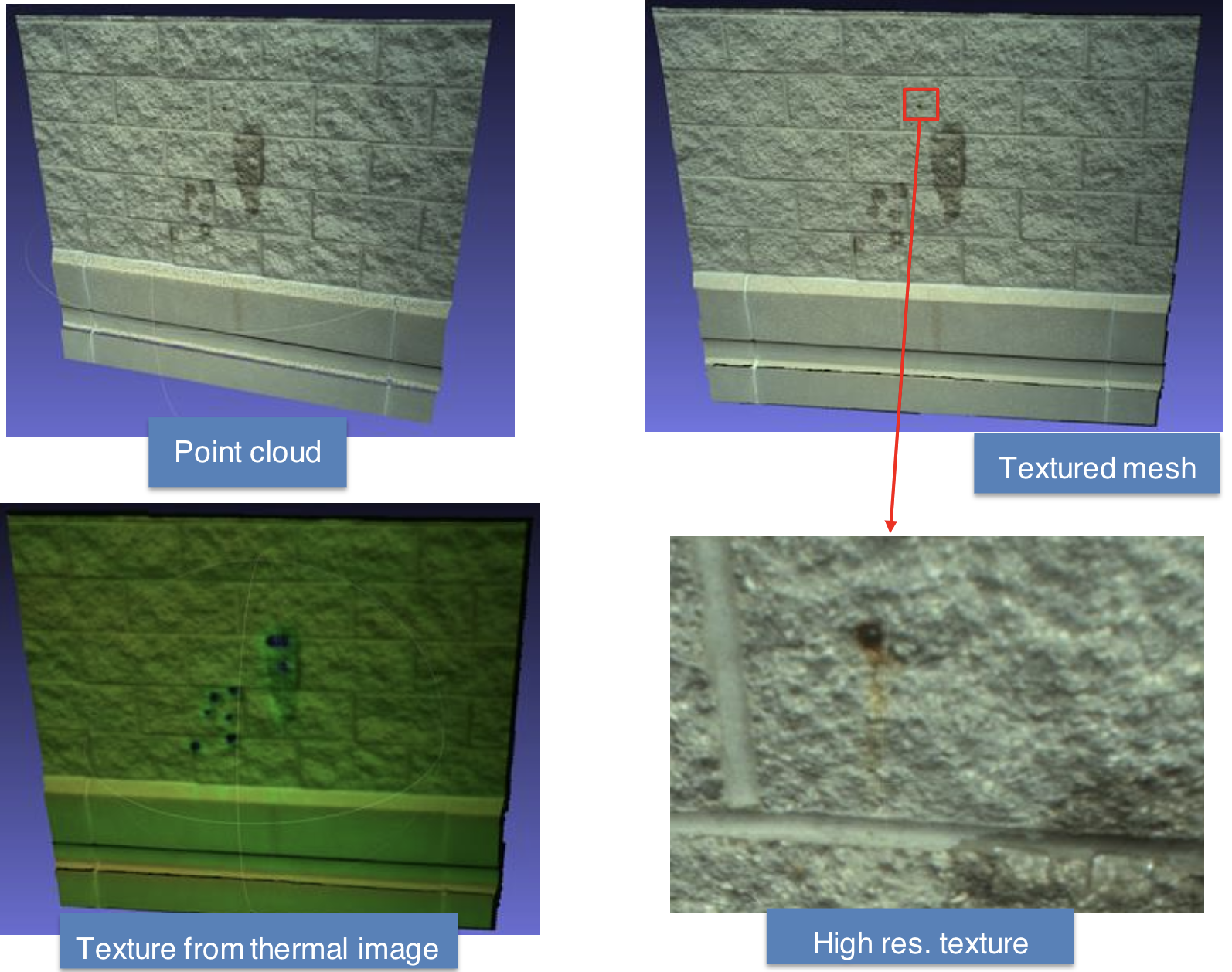

3D Reconstruction with Thermal Texture
Ruixuan Liu, Henry Zhang, Yaoyu Hu
Internship at the AirLab
website
This was a research project from my internship in the AirLab led by Prof. Sebastian Scherer. The goal of this project was to enable building inspection through 3D reconstruction. We aimed to add thermal texture to the 3D reconstruction to make the inspection more reliable.

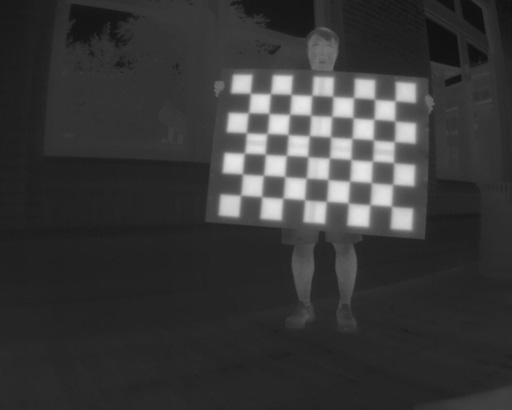

Methods of Thermal Camera Calibration
Ruixuan Liu, Henry Zhang, Sebastian Scherer
Internship at the AirLab
paper / website
This was a research project from my internship in the AirLab led by Prof. Sebastian Scherer.

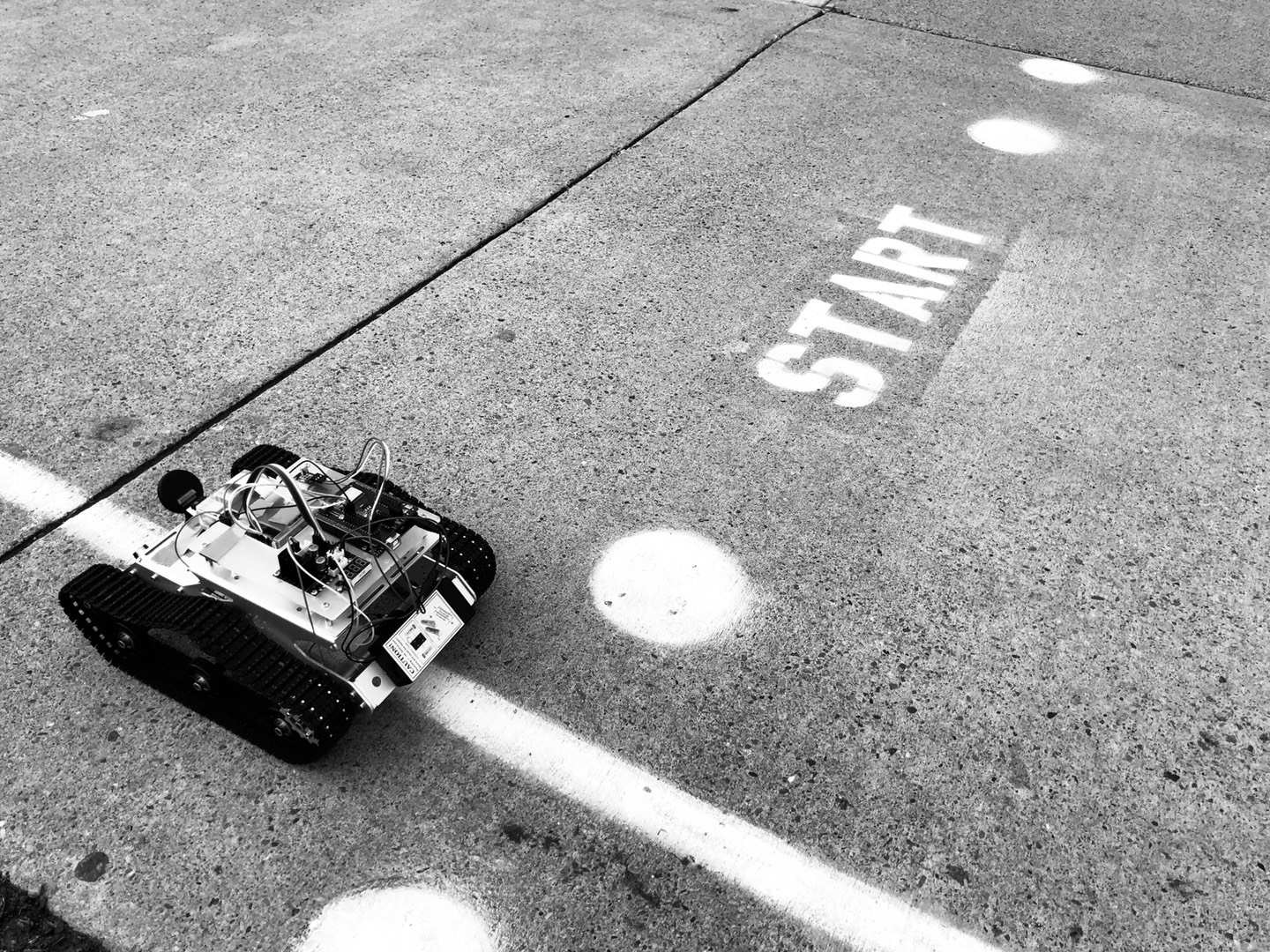

Line Following Tank
Ruixuan Liu, Henry Zhang, Alvin Shi
Third place, CMU Mobot Competition
website / youtube
This was a project for the 2018 CMU Mobot competition, an annual competition held by the School of Computer Science. The goal is to build a mobile robot that follows a track in front of Wean Hall. Small gates representing checkpoints are placed along the track, and the robot is required to pass through them, so it must strictly follow the line rather than head directly to the goal point. We finished in third place.

Gesture Drone
Ruixuan Liu, Henry Zhang, Alvin Shi
CMU Build18 Hackathon
website
This was a project for the 2018 CMU Build18 hackathon. The idea was to use simple, intuitive hand gestures to control a drone. We built a drone from scratch and modified the onboard controller to accept customized hand gesture input.

Smartphone Controlled Drone
Chris Yin, Ruixuan Liu
Internship at HIT
youtube
This was a project from my internship in the lab led by Prof. Xiaorui Zhu. The goal of this project was to achieve stable UAV control based on smartphone motion sensing, making the control method intuitive. This project was a collaboration with Chris Yin.

Design and source code from Jon Barron's website